The Mixed Reality Future We Predicted is Here

Produced in June 2013, this short experience concept predicted what future AR/VR/MR interfaces might look like by the year 2020. Relevant augmented information would be displayed by an AI anticipating user needs through geo-location, biometrics and the mobile web. Of course, the Stones will never go out of style.

As the anticipated launch of the Apple Vision Pro nears, our excitement for the mixed reality (AR/VR/MR) future we predicted over 10 years ago is hard to contain. For (sphere), it has never been a matter of “if” a pervasive mixed reality future would happen, but “when”. The Apple Vision Pro may be the best bet to push the adoption of this platform into the mainstream. Ubiquitous computing devices like the Apple Vision Pro will allow real-time augmented information and access to alternate realities no matter where we are in the real world. These alternate realities will not be distinct destinations (like those depicted in the movie Tron or even Meta’s Horizon Worlds) but an instant and pervasive experience, built on top of the mobile web, that follows us everywhere.

Every once in a while we are presented with a “dream” project brief, and this was the case when one of our favorite ram clients asked us back in 2013 to predict the future of user interface and user experience for the year 2020. Our research indicated that by 2020, experiences would be even more valuable than objects, and that wearables supporting augmented and virtual reality experiences would emerge as a major computing platform shift (now coined Spatial Computing by Apple). Facebook’s rebrand to Meta in the fall of 2021 helped validate our findings, and Apple’s upcoming entry into mixed reality will likely convert many skeptics into believers.

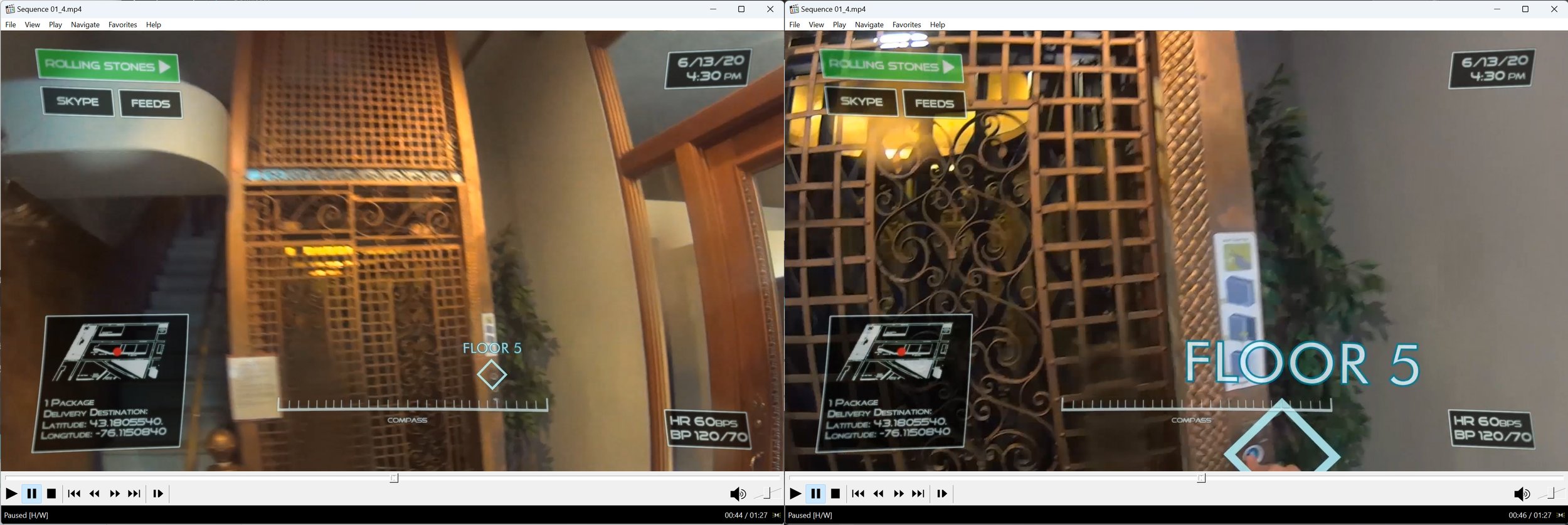

Presented from the point of view of a bike courier delivering a package, a heads up display (HUD) would provide base level information (map, compass, biometrics, date, time, AV feeds). The AI would anticipate the user’s geographic destination and provide augmented wayfinding information as needed.

The wayfinding graphics increase in size as the user approaches the interaction points.
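As a rough illustration of that behavior, here is a minimal sketch of distance-based scaling. It is not the logic used in the 2013 concept; the function name `wayfinding_scale`, the distance thresholds and the scale range are all hypothetical values chosen for the example.

```python
def wayfinding_scale(distance_m, near_m=5.0, far_m=100.0,
                     min_scale=0.25, max_scale=1.0):
    """Return a display scale that grows as the user nears an interaction point.

    All defaults are illustrative assumptions, not values from the concept.
    """
    # Clamp the distance so the scale stays within [min_scale, max_scale].
    clamped = max(near_m, min(far_m, distance_m))
    # closeness is 0.0 at far_m and 1.0 at near_m.
    closeness = (far_m - clamped) / (far_m - near_m)
    # Linearly interpolate between the smallest and largest graphic size.
    return min_scale + closeness * (max_scale - min_scale)


if __name__ == "__main__":
    for d in (150, 100, 50, 5):
        print(f"{d:>4} m -> scale {wayfinding_scale(d):.2f}")
```

A linear ramp is the simplest choice here; an easing curve could make the growth feel less abrupt near the interaction point.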

Sound is a separate augmented layer driven by biometrics, location and the task at hand. In this example, as the user’s heart rate lowers and they near their final destination, the AI automatically lowers the audio volume and may provide an alternative music track that better matches their situation (a personal soundtrack).
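One way to picture this adaptive audio layer is a volume that depends on both exertion and remaining distance. The sketch below is an assumption made for illustration only: the function `target_volume`, the heart rate bounds, the arrival radius and the volume range are hypothetical and do not come from the original concept.

```python
def _clamp01(value):
    """Limit a value to the range 0.0 to 1.0."""
    return max(0.0, min(1.0, value))


def target_volume(heart_rate_bpm, distance_m,
                  resting_bpm=60.0, peak_bpm=160.0,
                  arrival_radius_m=50.0,
                  min_volume=0.2, max_volume=1.0):
    """Return a volume that drops as heart rate lowers and the destination nears.

    All defaults are illustrative assumptions.
    """
    # effort is 0.0 at the resting heart rate and 1.0 at the peak heart rate.
    effort = _clamp01((heart_rate_bpm - resting_bpm) / (peak_bpm - resting_bpm))
    # away is 0.0 at the destination and 1.0 at or beyond the arrival radius.
    away = _clamp01(distance_m / arrival_radius_m)
    # Lowering either factor lowers the combined level.
    level = effort * away
    return min_volume + level * (max_volume - min_volume)


if __name__ == "__main__":
    print(target_volume(150, 400))  # hard effort, far from the destination
    print(target_volume(80, 10))    # calmer, close to the destination
```

Multiplying the two factors means either a calmer heart rate or a shorter remaining distance is enough to bring the volume down, which matches the behavior described above. Choosing the alternative music track would be a separate decision layered on top.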

The AI would automatically identify the appropriate recipient through facial recognition and provide a confirmed digital receipt of delivery.

The knowledge gained from this project became the catalyst and driving force that led us on this amazing journey we call (sphere). We believed in this future so much that we secured our first (sphere) utility patent: Wireless Immersive Capture and Viewing, a system that allows you to safely walk around the real world while being immersed in a virtual one. We discovered that in order to do this, we had to streamline the capture side, so we concentrated on the hardest part, the optics. (sphere)’s patented omnidirectional optics technology allows for more accurate, faster and more efficient ML processing to streamline embedded AI decision-making at the Edge. We founded the company upon this vision of the future and have been waiting for the rest of the world to catch up. We think it is happening.

This is the first part in a series of posts that document the history of (sphere). Next we will reveal some of the initial research that helped guide us.